Learning the Internet of Things with WaterElf, unPhone and the ESP32

Iteration 8 (for Q1 2025, 8.1678.2025-04-23; PDF version; plain HTML; unphone.net).

Copyright © Hamish Cunningham. Licence: CC-BY-SA-NC 4.0. (.js: Christan Hare.)

This book covers Sheffield University’s Internet of Things course, iteration 8, running in Spring 2025. It is intended for students of that course, but should also be useful to anyone studying the IoT and its expression in electronic devices based on microcontrollers in general (and the ESP32 family in particular).

Each chapter begins with general discussion, history or theory, then finishes with instructions for a week’s worth of practical work. Although later material depends to varying degrees on preceding chapters, we have tried to make the order of the practical work as flexible as possible so that you can learn in whatever sequence is convenient for you.

(The practical work in this chapter and next, though, covers enrolling on the course and setting up your development environment, so please prioritise its early completion!)

So, what is the IoT and where does this course fit in? Read on…

You made it! You’ve worked hard for years to get this far, and now you get to play :)

As computer scientists and software engineers we spend much of our time with machines whose secrets are hidden beneath multiple layers of abstraction: BIOS, bootloader, operating system, programming language, library API, protocol definition, SDK, etc. etc. These layers allow us to build systems of incredible power and sophistication, but they also (intentionally) obscure the operation of the underlying hardware engine. This course is a chance to rip the top off the box of tricks, hotwire the starter motor and delve deep into the grungy secrets of the electronic wizardry that makes our discipline possible.

The course is also a way to understand how the latest great wave of technological transformation is streaming into our lives, enabled by the combination of tiny connected microcontrollers, cyber-physical systems and ubiquitous networking. This transformation is driven by the same logic as the explosive consequences of TCP/IP and HTML, but if the last 20 years were about the web, then the next 20 will be about making. Just as always-on connectivity and decentralised production in the virtual world enabled revolutions in creating, sharing and consuming on-line, now the same changes are starting in the world of manufacturing, and the consequences are likely to be massive. (There’s perhaps an 80-20 ratio between economic activity devoted to atoms, or physical processes, in comparison to bits, or informational processes.) In the IoT there’s still room to get in on the ground floor, and learn how to make with the foundational hardware of the next wave.

Hang on to your hat; the future is a whirlwind!

Actually there are two :(

First, you’re going to be programming a tiny little device that has, in comparison to that shiny laptop on your desk or that smartphone in your pocket, close to zero computational resource. There’s no paging, for example, so in the sense of time sharing (first developed in the 1960s) our devices don’t even run an operating system! This can be a challenge. Be prepared.

Second, ideally you’re going to need to install an SDK of one sort or another on your own machine, because you need to be able to spend concentrated hours playing with the beasts, and there’s nowhere better than your own computer for experimenting.

To help you with these challenges we will:

If you don’t have a machine that you can use for this and need something cheap, you might try a Raspberry Pi 4 or better (preferably an 8GB model), which is quite capable of running the basic IoT SDK that we work with.

If you’ve already got a machine running MacOS or Windoze don’t panic:

And: look on the bright side, the more you do, the more you learn :) (And: DevOps is a thing.)

Actually I lied: there are three catches:

As the perpetual beta style of service-oriented computing moves into physical spaces, we’re all at the mercy of suppliers’ willingness to use us as guinea pigs, only this time our homes and our clothes and our handbags are the venue of choice. Will the future of the IoT be dominated by botnets and fraud? Or will we use the new machines to help face up to the massive survival challenges that a finite world poses to an expansionist system? I think you’re part of the answer.

As humanity’s latest pandemic swirled across the world, wreaking havoc on the poor, the old and the unlucky (and giving the powerful their latest excuse for moulding us all into yet more profitable shapes), some small comfort could be found in the contemplation of the intricate. The history of machines is part of what distinguishes our species: cooperating to create the preconditions of our existence using ever more sophisticated engines.

Computational engines have brought a new level of generality to the picture: Turing machines exemplified by von Neumann architectures and running on some millions or billions of transistors have become a universal mechanism for information processing and automatic control, and collectively we are deploying more and more compute power, memory and storage at a truly astonishing rate. The extreme expression of this tendency is cloud computing, to the degree that it is now hard to imagine a compute problem which would exceed the combined power of the modern cloud. (ChatGPT is a striking example of the revolutionary consequences of coupling this power with the data accumulated over several decades of web and social media collection. Not a general AI, but something that sometimes does a very convincing imitation of one!)

At the opposite extreme from the general purpose computer are those engines that are tailored (ever more precisely) to a specific purpose. These machines, in the limit, form minimal solutions to complex problems. There are few fields that exhibit this minimalism as purely as what is now called the Internet of Things. As computation has permeated almost all corners of technology a particular class of problems has become prominent, where we seek not generality but to consume the smallest viable quantity of resource. The use cases for a general purpose machine are always expanding and we can always, potentially at least, justify the devotion of more resource to their construction. In contrast, the use cases for the Internet of Things are inextricably tied to objects whose sizes, costs and operational environments present a constant resource challenge, and a constant downwards pressure on compute cycles and power consumption.

Computing, then, has two souls: the general and the specific. This book argues that the IoT is firmly part of the latter. Serendipitously, this soul uses as little planetary resource (and our precious attention) as is possible. Reduce, reuse, recycle (and repair, and reclaim, and recover, and… well, the time for action is now!).

The solutions that we build in the IoT are beautiful in their intricate simplicity, minimal expressions of the vast power of our universal machines, and their “…perfection is attained not when there is nothing more to add, but when there is nothing more to remove”.

Of course we don’t always achieve minimalist elegance on our first tries. Heath Robinson captured the feeling I have when I look at some of my previous attempts at IoT devices:

This course is about transforming our computational selves from high priests of the general purpose to paragons of minimalism (probably via the joyfully messy intermediary of Heath Robinson).

Enjoy the ride!

We will come to the question of how to think about the various types of hardware used in the IoT many times during the course. To begin with, suffice it to say that IoT devices use network-connected microcontrollers. (They may be interfaced to IoT hubs and gateways, which generally use ARM microprocessors, but for our purposes the key innovation and core hardware is the networked microcontroller.)

For this course, we will use a microcontroller called ESP32, which although a relative newcomer has become very popular over the last half decade or so. It is relatively cheap and its firmware ecosystem is very open by industry standards. Every student on the course gets an ESP32 development board, and programming it is a core practical skill that we aim to teach here.

Feel free to read more about the chip now, or wait and see :)

What if we wish not simply for the comfort offered by contemplating (and, later, controlling) the miraculous intricacies of task-specific computational engines, but also for hope?

Take a minute. Switch off your phone. Look at the objects around you, remember the food on your plate at your last meal, feel the warmth of your clothing, the security of the building you are sitting in. The economic system that creates all of these things, all of these preconditions for your existence, is driven by a single imperative that equates profit with value. When that equation is combined with the vast and impersonal forces of transnational corporate competition the results are well known. We use our atmosphere as a sink for the carbon remains of an aeon of decayed flora and fauna. We use each other as expendable “human resources.” We crowd sick animals together in zoonotic melting pots and express surprise at the apocalyptic consequences.

In this somewhat gloomy context there are nevertheless several glimmers of hope connected to the IoT… but allow me, dear reader, a little suspense in my narrative: we’ll come back to how to save the world after we’ve done some of the spade work. Watch this space :)

The course (like life) has two sides, practical and theoretical. The practical work is split in two halves:

We provide information via three main channels:

(There is also a small amount of administrative information on the University Blackboard page for the course; make sure to check it out.)

There is a notes section for each week of the course. Each chapter ends with a “week N course notes” section describing our objectives for this part, and specifying practical work that we want you to complete. By and large you can read the first parts of the chapters in lean-back mode: they are discursive, and are intended to give a flavour of the field and its historical and technical contexts. The latter parts of the chapters are more directive, and you’ll need to sit at a keyboard and follow instructions; the intent is to lead you to practical outcomes and running code.

There is example code in the exercises tree of the course materials repository. This code covers many of the tasks that we ask you to complete week by week. You can, of course, copy the answers without trying to come up with your own solution, but you will learn little by doing so. The recommended method is to implement your own answer to the exercises, then go to the relevant example code and compare it with yours.

This is a 10 credit (level 3) module; this means about 100 hours of work. It is taught over one semester, so it averages out at about 7-8 hours per week. (Depending on your existing skills and previous experience you may need more or less time.)

This is iteration 8 running in 2025.

Last year’s course was the best ever according to student feedback, so we’re going to keep most things the same this year.

One exception is that as the global race to spawn trillionaires continues to suck up resources like a mindless black hole, so our own local version of austerity now prevents us from giving away the project hardware as in previous years :(

Luckily we are still able to give away expansion boards for unPhone based projects :)

Other updates are similar to last year:

You lucky things.

IDF and Arduino core versions: we’re still using 4.4.x and 2.0.x releases while waiting for 5.x.x / 3.x.x (the latter will likely need a fair bit of migration work). Versions 2.0.6 (a little old but quite stable) and 2.0.14 (the latest of the 2.x line, bringing with it IDF 4.4.6) of the core should both work.

This was iteration 6 running in 2023.

Changes:

This was iteration 5 running in 2022.

The previous run, iteration 4, with almost no lab sessions, went better than expected :) (The student feedback we collected is available from Blackboard.)

There were a couple of things that glitched a little:

In 2022 we’re making these changes in order to address those two issues:

In addition, I’ve significantly simplified the support tooling (specifically the magic.sh shell script), and added the ability to use the tooling via Docker to make cross-platform working easier.

I hope you like it! (If you do, tell others, if you don’t, tell me ASAP and I’ll try to fix it!)

We needed to change quite a lot from last time the course ran! The 2021 version relies on 3 Ts: Toys@home, lots of Text, and Tech support :)

Threshold-and-grading assessment works by separating the process of passing the course from the process of attaining a good grade. The threshold assessments check that you have a basic understanding of all the material; the grading assessment evaluates the quality of that understanding in depth.

The course is assessed by two practicals (lab1, lab2) and a (multiple choice) exam. Lab1 happens at week 7 and lab2 at week 12 (and the exam during the standard exam period at the end of the semester). (A mock exam during term will allow you to practise the material.)

You must pass lab1 and the exam in order to pass the course. This will give you a score of 40%. In order to score more than 40% you must also complete lab2, the project.

Lab1 and the exam are assessed as pass/fail only (and no score is given for lab1). A pass in both contributes 40 marks to your final result.

Lab2 is a project which students spend several weeks completing at the end of the course. The assessment is open-ended and qualitative in nature. It is marked out of 100 and contributes a maximum of 60 marks to your final score. Lab2 is assessed in a similar manner to Lab1, so feedback from Lab1 can guide you in performing Lab2 (with the exception that the latter has around four times more time available for your work, and therefore should be commensurately bigger).
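As a worked example, the marking scheme just described can be summarised as a formula. This is a sketch under one stated assumption: that the Lab2 mark out of 100 is scaled linearly onto its 60-mark share (the text above only gives the maximum). Writing $L_2$ for the Lab2 mark ($0 \le L_2 \le 100$) and $M$ for the final mark:

$$
M = \begin{cases}
40 + 0.6\,L_2 & \text{if Lab1 and the exam are both passed,}\\
\text{fail} & \text{otherwise.}
\end{cases}
$$

So, for example, passing both threshold assessments and scoring 70 on Lab2 would give $M = 40 + 0.6 \times 70 = 40 + 42 = 82$, while not submitting Lab2 ($L_2 = 0$) leaves you at 40.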

This is an advanced course and the project assessment in particular is intentionally open-ended. There is no single right answer! If you’re unsure about how much effort to expend the rule of thumb is to put in as many hours as is reasonable for a 10 credit module (see above; perhaps 8 or 10 hours per week).

We’ll give more detailed guidance in relation to each of the assessments as they occur; the things to remember to begin with are that:

This section describes stuff to do before you start the course:

1. We will communicate with you using Git and GitLab, both to deliver course materials and assignments to you, and for you to submit coursework to us. Therefore you are not fully enrolled for the course until you set up an appropriate git repository and give us the details. Do this ASAP!

2. Spend some time getting to know git and the other tools we’ll be using. (The Linux command line is powerful, elegant and, to newcomers, challenging. Using git from the command line adds another set of challenges. A bit of preparation will help!)

3. Refresh your memory of the Diamond Electronics lab safety protocols. You cannot participate in lab sessions without having completed the safety work.

Details follow.

NOTE: the images below say “2021” in them, whereas you need to use “2025,” and there are a few minor changes in the current version of GitLab.

First register an account on GitLab.com if you don’t have one already. Use your Sheffield email address (ending in @sheffield.ac.uk) to register. (NOTE: be sure to use https://gitlab.com/ and NOT the Sheffield-based GitLab server, which is being phased out.)

Second, create a private repository (project) there (from the “blank project” option) called com3505-student-2025:

(Make sure to select the Initialize repository with a README option.)

Third, go to the Project Information members settings…

…and add hamishcunningham…

…as a project maintainer:

Lastly submit your GitLab user name to our server (see next section).

Your user name (or “ID” for our purposes) is the name you log in with, and appears in the URL of the site when you’re logged in. For example, Hamish’s user name / ID is hamishcunningham and his public GitLab projects appear at gitlab.com/hamishcunningham (and GitHub ones here, and SourceForge here, and Docker images here…).

We need to know your username and match it up with your student record so that we can pull the coursework that you commit there. Tell us your username by filling in the form linked from the Blackboard page.

Note: don’t send your numeric ID from GitLab, but the alphanumeric user name / path that appears in your project URLs. (Mine is hamishcunningham.)

To create and deliver the course we use most or all of the following tools. You can follow the course without using the command line, but we recommend learning it if you can; it is debatable whether you’re ever really in control of a machine for which your only interface is a GUI (especially when that GUI is closed source).

It is a good idea to get to know at least Git and if possible the ESP and Arduino tools before starting (though you should be able to pick them up as we go along if needed).

In addition we will need a code editor or Integrated Development Environment (IDE) for writing IoT device firmware in C and C++, e.g.:

We’ll cover how to set up these tools next week.

Parts of the practical work for the course are best done in the Diamond Electronics Laboratory (DIA 2.02), and we have sessions available each week of the course in 2025. You are also entitled to use the facilities in the iForge (the Diamond 1.01 project space, see next section) if you need more time, though do make sure to register with them before trying to gain access.

The electronics lab is a fantastic learning environment, and we give you as much time in there as we can during the course. It is also a potentially dangerous environment. Before coming to any lab session you must do the following:

Soldering is optional this year (but definitely a useful skill for IoT prototyping); if you want to do soldering in the lab, please also watch this tutorial video and see section 3 of this lab intro.

Please follow University guidelines relating to covid-19 at all times!

The iForge is the University’s student-led makerspace that provides workshop and making facilities outside the scope of your degree. If you would like to use the iForge there are a couple of prerequisites:

If you would like to find out more about the iForge feel free to check out their linktree or head to DIA 1.01 to find out more in person.

Have you done the preliminaries?? If not, now would be a good time :)

Our objectives this week are to:

The assessment of your practical work on the course is delivered by checking in to your git repository and pushing to the “origin remote” (on gitlab.com). If you don’t push anything, we won’t be able to give you feedback or to mark your work, so it is important to get used to this system early on.

Check out your repository from gitlab into your own file space (the first time you start work on a new machine):

git clone ...url..., for example:

git clone https://gitlab.com/YourGitLabUname/com3505-student-2025.git

Other common commands:

git status to tell you what the current state of your file tree is, and git diff to show detailed changes

git add file-path

git commit -vam 'a commit log message'

git push

git pull

Note: git (and GitLab / GitHub) have become industry standards in recent years, so it is important for you to get to know at least the basics. However, an independent survey of experts recently estimated that of 2,153 git command options, fully 213% were either contradictory, confusing or error-inducing (or all three). [I may have made that bit up.] It is depressingly easy to get git into a mess! [I didn’t make that bit up.] What to do? Here’s one way to escape from git hell…

Let’s say you have conflicts in your com3505-student-2025 repository and the process of resolving them is proving difficult. To re-create a clean version of the repository (in your home directory):

cd

mv com3505-student-2025 saved-repo

git clone https://gitlab.com/YourGitLabUname/com3505-student-2025.git

You’ve now got a fresh copy to work with; if you have changes in the saved version you can copy them over to the new, then commit and push from there.

Yes, it was called ‘git’ for a reason…

This section describes what to do in your first lab session:

If you finish early you might also want to learn about measuring simple values (using the multimeters and/or oscilloscope), but this is optional.

The hardware kits give you an ESP32 net-connected microcontroller, sensors and actuators, and the means to prototype experimental circuits: the foundations of IoT hardware.

For reference each kit should contain:

(In the second half of term we’ll give you additional hardware for your project as necessary. Enjoy!)

shell or bash, often running in a terminal) to do many of our tasks. On MacOS an old version is available by default; update like this. Ports of these tools are also available for Windows (try Cygwin, Git Bash, Windows Subsystem for Linux, or perhaps an Ubuntu VM or a container via Qemu, LXC or Docker). If you find these tools difficult then please read up on them and practise. See also the links about tools above.

When you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meager and unsatisfactory kind: it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the stage of science. (William Thomson)

Not everything that counts can be counted, and not everything that can be counted counts. (Albert Einstein)

Low cost networked computers are adding eyes and ears (or sensors) and arms, legs and voices (or actuators) to the Internet. These new devices are being connected to on-line brains (big data and deep learning in the cloud). This new field is the IoT, of course. Will the result be a ‘world robot’ (Schneier 2017)?! This chapter will start to cover some of the context and history of the IoT. Chapters that follow will cover the hardware that makes it possible, the communications protocols and security systems it relies on, and the cloud-side analytics that make sense of the data it produces.

The practical work this week is getting to know the SDKs and IDEs that allow us to develop software (or firmware) for the net-connected microcontrollers that are the foundations of IoT devices.

We’ll begin with a couple of definitions, then look at the genesis of the software ecosystem that we’ll be using to program our IoT devices, before moving on to the practical question of development tools.

There are many definitions of the Internet of Things (IoT); one of the most exciting is given by Bruce Schneier in his provocative piece Click Here to Kill Everyone:

…the Internet of Things has three parts. There are the sensors that collect data about us and our environment… Then there are the “smarts” that figure out what the data means and what to do about it… And finally, there are the actuators that affect our environment. … You can think of the sensors as the eyes and ears of the internet. You can think of the actuators as the hands and feet of the internet. And you can think of the stuff in the middle as the brain. We are building an internet that senses, thinks, and acts. This is the classic definition of a robot. We’re building a world-size robot, and we don’t even realize it. (Schneier 2017)

At the other extreme the IoT is about what becomes possible when networked microcontrollers become cheap enough to embed in very many everyday contexts, from central heating thermostats to garage doors. These devices face tight constraints of power usage and cost, and concomitant challenges to their security and functionality. They also quickly become extremely numerous, driving work on big data analytics and cloud computing.

The technology of networked devices goes back perhaps 50 years or more. The coining of the term itself is often credited to Kevin Ashton in 1999, while working at the Auto ID Center at MIT (Ashton 2011), of which more later.

If we understand the past we stand a better chance of seeing into the future. Where did the IoT come from? Where did the systems we’re going to be coding with come from? Later on we’ll learn about an embedded electronics ecosystem that brought the needs of Italian artists together with the origins of the operating system that drives much of the internet and the compiler suite that has been ported to more architectures than any other… and spawned the Arduino.

But first: do you own a phone?

You are very probably carrying a phone, but do you own it?! Call me an old stickler, but I think that if I own something then:

Do those things apply to your phone? How about your laptop? Your tablet? In the 2020s we often pay for electronics which the people who sell them claim we will own, but if we take the trouble to read the licensing documents that accompany them we often find that we have few rights over them, and that repair, for example, is expensive or difficult or voids the manufacturer’s warranty. Much of the electronic and computational ecosystems we’ll spend most of our time with in this course arose from a similar realisation some 50 years ago.

Back when I was a wee small snotty thing, and many of you were only minute folds in the quantum potential of possible future universes, the PDP 10 was a cool computer:

It was the first machine to make time sharing (or multitasking) common, and it had a huge maximum memory of… a whole megabyte!

The operating system code (assembler) on the PDP 10 and its immediate cousins was routinely shared and improved by a community of programmers (or “hackers”) at MIT, including one Richard Stallman.

As computing companies became bigger and more profitable in the 1980s practices began to change: there started to be no more access to source code, and users would have to sign an NDA (non-disclosure agreement) even to get access to a binary. Stallman suffered the consequences of an aversion to NDAs when he was refused access to the source for a printer control program, even though his intention was to improve that program. He says: ‘This meant that the first step in using a computer was to promise not to help your neighbor. A cooperating community was forbidden. The rule made by the owners of proprietary software was, “If you share with your neighbor, you are a pirate. If you want any changes, beg us to make them.”’ (Stallman 2002)

‘So I looked for a way that a programmer could do something for the good. I asked myself, was there a program or programs that I could write, so as to make a community possible once again?

The answer was clear: what was needed first was an operating system. That is the crucial software for starting to use a computer. With an operating system, you can do many things; without one, you cannot run the computer at all. With a free operating system, we could again have a community of cooperating hackers—and invite anyone to join. And anyone would be able to use a computer without starting out by conspiring to deprive his or her friends.’ (Ibid.)

And this is what led to the kernel code that runs your Android phone, the GNU/Linux operating system that runs the majority of servers in the cloud, and the compiler code you’ll use to create firmware to run on the ESP32 in this course…

Why does this matter?

And, perhaps most important of all, we have been describing the genesis of the whole free and open source software movement, which has been responsible for huge portions of the code running our world, and which in combination probably constitute the most complex machine ever constructed. Where will it go? Where will humanity go, locked into a triple crisis of pandemic, environment and economy? The two questions are (perhaps uncomfortably) tightly linked.

Ok, enough context for now. We’ll come back to IoT history next week; now for something practical :)

When we write code to run on a microprocessor (e.g. on your laptop, phone, or that Cray you have in the garden shed) we use all the facilities of the operating system, a modern programming language and its library ecosystem to insulate ourselves from the underlying hardware and to provide a set of abstractions, APIs and components that can make us productive at high speed. In recent times we have started adding on-line cloud APIs to the mix, making our potential deployments of computational resource truly vast.

On a microcontroller like the ESP32 in your kits, we have a lot less support: programming is typically with low level languages (C, C++, occasionally bits of assembler), the ‘operating system’ is more like a task management library, and the development tools are often cranky and basic. Firmware usually has to be uploaded to the device over a serial line or JTAG connection, and the tooling to perform this task is different for every hardware family.

In some cases a new world of Javascript or Python programming and drag-and-drop upload (e.g. over USB mass storage class) is starting to become available for the IoT, but:

The second of these points in particular means that projects that need to use the hardware efficiently and to exploit all of the available facilities will most often write in C/C++.

(Not convinced? See Appendix B for how to use Python on the Feather S3 and the unPhone. In fact Python is becoming a good choice for IoT prototyping at least, especially if you already know it.)

This section begins by looking at the basic (toolchain, SDK and library) building blocks of development on the ESP32, then goes on to describe available development environments. Later on, section 2.6.4 will talk you through installing your own development environment and taking your first steps in programming an IoT device.

In any novel embedded system the foundational step is to port a C compiler to the new chip. The compiler, linker and the tools that take a binary image and burn it into the memory space of the device (in a form that can then be sucked up by a bootloader and turned into a running process) are called collectively the toolchain. The compiler is generally an unusual beast, as it has to run on one platform (your development machine, x86_64 perhaps) but produce binaries for a different architecture (Xtensa being the one on the ESP32 in your parts kit, or RISC-V the coming thing); i.e. we need a cross compiler. So when installing a development environment for the ESP32 we need to pick up the Xtensa port of GCC (the GNU Compiler Collection, aka GNU C Compiler).

There’s good news and bad news about this step:

There are snakes in the long grass, wear your wellingtons.

A compiler, when ported to an IoT microcontroller, will open up the magical gates of productivity and allow you to do… not very much at all! The differentiators between the (many) different chips competing for IoT oxygen relate to their hardware facilities, and to access these we almost always need more than is provided by the standard C library. (On the smaller, more resource-constrained devices, the standard library may not even be present due to lack of space; in the case of the C++ library this is actually quite likely.) In order to sell chips, then, the microcontroller manufacturer needs to supply a set of libraries that expose the hardware functionality of its wares in as friendly and powerful a way as possible.

On the ESP32 there are two main layers that we will be dealing with in some detail (and a third which we’ll touch on here and there):

We’ll describe each of these in the rest of this section; section 2.6 below will start us off on the process of installing and running the various SDKs, libraries and development tools that we need to learn.

Espressif, maker of the ESP32, provides an SDK and library set called ESP-IDF, or Espressif IoT Development Framework, whose development is hosted as open source on GitHub (Oner 2021).

ESP-IDF has grown to be a large and successful open source project that incorporates ports of many other libraries to ESP hardware. For example, MbedTLS (source code) is a very popular small footprint cryptographic library (supporting certificate-based security and the TLS protocol that underlies security on the web via HTTPS). Espressif support a port of MbedTLS (documented here, source here) that exploits the ESP32’s hardware acceleration facilities for tasks like random number generation or hashing. This hides the peculiarities of the ESP’s underlying hardware from the programmer, who can instead use familiar and well-documented abstractions as surfaced by the MbedTLS library.

Other libraries that are supported for the ESP32 via ESP-IDF include:

lwip: a complete TCP/IP stack
spiffs: a filesystem for SPI-attached flash memory
protobuf-c: protocol buffers serialisation format
mqtt: the MQTT publish-subscribe messaging protocol
coap: the CoAP communication protocol

In each case IDF adapts the library to the particularities of the underlying hardware. This makes it easier for programmers to exploit the chip to its full potential without learning the grungey details of which register does what under what conditions.

Whenever we write code for the ESP32 we will be using ESP-IDF APIs, either directly or indirectly. To begin with, the easiest way is to use them indirectly, letting the Arduino compatibility layer take the strain (see below).

As of early 2025 there are two main versions of IDF that we will come across:

From version 4 IDF changed its principal build system from GNU Make to CMake, and this has made supporting multiple versions quite complex. (In version 5 the legacy GNU Make build system was removed.) The distribution provides three scripts that set up the environment the build systems need to work:

flash (to burn firmware) and monitor (to listen on serial); this lives somewhere like ~/esp/esp-idf depending on how you’ve set up the install (~/the-internet-of-things/support/tooling/esp-idf if you’re using the setup scripts supplied for this course)
IDF_PATH, the location of the IDF file tree; this also lives in esp-idf
venv (virtual environment) script that sets up PATH etc. to pull in the requisite flavour of Python; this lives in ~/.espressif; a common cause of errors is that the virtual environment gets out of sync with the rest of the IDF install29

Each of these has changed and evolved over different versions of IDF, and together they present a difficult moving target to IDEs and automation scripts like Eclipse, PlatformIO and VSCode. (IDF is also a rapidly changing system that integrates many 3rd party libraries.) As a result, it has previously been quite challenging to get a 4.4.x IDF setup working with the Arduino layer. Luckily this has mostly passed, with the 2.0.6 release of the Arduino core being based on 4.4. We’ll start with 2.0.6 for our work; versions through 2.0.14 should also work fine.

An additional complexity is that IDF provides a configuration tool, menuconfig (based on the KConfig language), originally developed for the Linux Kernel. This exposes many of the optional features of IDF and of the libraries it incorporates. To use it you have to be doing a native IDF build of one type or another (which means you can’t easily use it in the Arduino IDE). Some of its capabilities are mirrored in configuration that is exposed by the Arduino core, but not all.
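Here is an illustration of how menuconfig choices reach your code. The IDF build turns the options saved in sdkconfig into CONFIG_-prefixed C macros, which live in a generated sdkconfig.h header, and code can test or use these directly. The sketch below uses CONFIG_FREERTOS_HZ (the FreeRTOS tick rate). When it is built on a host without IDF, it falls back to an assumed 1000 Hz. The conversion follows the arithmetic of FreeRTOS’s pdMS_TO_TICKS macro, but uses a 64-bit intermediate so that large delays do not overflow. The name msToTicks is hypothetical.

```cpp
#include <cstdint>

// In an ESP-IDF build, options chosen in menuconfig end up as CONFIG_* macros
// in the generated sdkconfig.h. Outside IDF that header does not exist, so we
// fall back to an assumed value purely so this sketch compiles on a host.
#if defined(__has_include)
#if __has_include("sdkconfig.h")
#include "sdkconfig.h"
#endif
#endif

#ifndef CONFIG_FREERTOS_HZ
#define CONFIG_FREERTOS_HZ 1000  // assumption for host builds only
#endif

static_assert(CONFIG_FREERTOS_HZ > 0, "tick rate must be positive");

// Convert milliseconds to FreeRTOS ticks: ticks = ms * tickRateHz / 1000,
// rounded down (the same arithmetic as pdMS_TO_TICKS), using a 64-bit
// intermediate so large delays do not overflow.
constexpr uint32_t msToTicks(uint32_t ms, uint32_t tickRateHz = CONFIG_FREERTOS_HZ) {
  return static_cast<uint32_t>(static_cast<uint64_t>(ms) * tickRateHz / 1000u);
}
```

With a 100 Hz tick, for example, any delay shorter than 10 ms rounds down to zero ticks. This is one reason why the same code can behave differently under different sdkconfig settings.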

Another important facility that we can access via the Espressif SDK is FreeRTOS, an open source real time ‘operating system’. FreeRTOS provides an abstraction for task initialisation, spawning and management, including timer interrupts and the ability to achieve a form of multiprocessing using priority-based scheduling. The ESP32 is a dual core chip, and its memory space is shared across both cores (i.e. it provides symmetric multiprocessing, or SMP). FreeRTOS allows us to exploit situations where several tasks can run simultaneously (one on each core), or where several tasks can be interleaved on a single core to emulate multithreading.

This doesn’t necessarily make life simpler! Most microcontroller code, at least in the hobbyist and maker spaces, is written to target single core chips, and the potential for shooting yourself in the foot rises very quickly when trying to adapt to a world where multiple things may be happening (or at least seeming to happen) all at once. Who owns this piece of memory? What will happen when two tasks both try and talk over this bus? Why does my code behave differently if I pause a task for a few microseconds? To begin with at least, it may be easier to limit yourself to a single task that runs an infinite loop, and worry about FreeRTOS later on. (This is what happens, in fact, when we delegate our main program entry point to the Arduino “core” for ESP32: the setup and loop procedures which characterise Arduino programming are implemented for us using a FreeRTOS task, but we don’t have to worry about the details.)
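A simplified model of the arrangement just described may help: the core runs a task that calls setup once and then calls loop repeatedly. The function below is a host-side illustration only. The name runLoopTaskModel is hypothetical, and the loop is bounded so that the model terminates. In the real core, roughly speaking, loop is called from an unbounded loop inside a FreeRTOS task, with some extra housekeeping between calls.

```cpp
#include <functional>

// Hypothetical host-side model of the ESP32 Arduino core's main task: call
// setup() exactly once, then call loop() over and over. The real core loops
// forever inside a FreeRTOS task; here the loop is bounded so the model ends.
void runLoopTaskModel(const std::function<void()>& setup,
                      const std::function<void()>& loop,
                      unsigned iterations) {
  setup();                                   // one-off initialisation
  for (unsigned i = 0; i < iterations; ++i) {
    loop();                                  // the body of the "infinite" loop
  }
}
```

In a real sketch you never call anything like this yourself. You just define setup() and loop(), and the core wires them up for you.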

Later on, interrupts, tasks, event queues, mutexes and semaphores will all become objects of interest, and if you’ve a mind to dive into the mysteries of FreeRTOS that’s where you’ll find them. Enjoy!
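To give a flavour of why mutexes matter, here is a host-side analogy in standard C++. Here std::thread and std::mutex stand in for FreeRTOS tasks and a FreeRTOS mutex. On the ESP32 itself, the FreeRTOS counterparts would be xSemaphoreCreateMutex, xSemaphoreTake and xSemaphoreGive. In the sketch, several threads increment one shared counter. The lock ensures that each read-modify-write completes before another thread can touch the counter, so no increments are lost. The function name countWithMutex is hypothetical.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Host-side analogy: several "tasks" (threads) increment a shared counter.
// Each increment happens while holding the mutex, so updates cannot interleave.
int countWithMutex(int tasks, int incrementsPerTask) {
  int counter = 0;
  std::mutex counterMutex;                 // guards counter
  std::vector<std::thread> workers;
  for (int t = 0; t < tasks; ++t) {
    workers.emplace_back([&] {
      for (int i = 0; i < incrementsPerTask; ++i) {
        std::lock_guard<std::mutex> lock(counterMutex);  // take the mutex
        ++counter;                                        // critical section
      }                                                   // released here
    });
  }
  for (auto& w : workers) w.join();        // wait for every task to finish
  return counter;
}
```

If you remove the lock_guard line, the result will usually come out short. Strictly speaking the program then has a data race and its behaviour is undefined. This is exactly the “who owns this piece of memory?” problem mentioned above.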

Fairly early in the lifetime of the ESP32’s predecessor chip, the ESP826630, a Bulgarian developer going by the somewhat cryptic moniker of me-no-dev decided that what was needed was integration with the Arduino ecosystem. He set out to develop a compatibility layer between Arduino libraries and development tools and the ESP8266. The work rapidly captured the imagination of a large number of developers, and has become a mainstay of the ESP community: nowadays there are commits from more than 500 people in the GitHub repos, and perhaps 10,000 commits overall. Espressif could see that this was a good thing: they hired me-no-dev and he now works full time on the “Arduino core” for the ESP32. The project can be found here.

What’s an Arduino core when it’s at home? I’m not sure where the term originated, but it basically means all the code that interfaces a device and its toolchain, compiler settings, and libraries to a) other Arduino library code and b) the Arduino IDE. This piece of kit is incredibly useful, because the Arduino ecosystem is massive. Pick up any electronic component (sensor, actuator, dog brush or kitchen sink) and the likelihood is that someone has published an Arduino-compatible library for it. This makes programming from the Arduino APIs hugely more productive than otherwise, because we’re almost always working from widely used running code. Win win win!

Motherhood, apple pie, happy ever after. Except: VERSION HELL!!!

When we program the ESP32 we’re typically using C code from ESP-IDF, C code from the libraries that have been ported to the ESP chip family and included as part of ESP-IDF, C++ code that translates between ESP-IDF and the Arduino APIs, and C++ code that exposes sensor and actuator hardware within the Arduino ecosystem. (All of these are in active development, and all have their own release cycles.) We are then compiling this morass with a toolchain that has its own version trail, controlled by a build system that comes in a whole bunch of different and subtly incompatible flavours and, finally, uploading it to the IoT device using a Python script written in … erm, Python (possibly one of the least stable programming languages ever devised31).

Hmmm.
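One cheap defence against this kind of version drift is to make your code check, at compile time, which core it is being built against. The ESP32 Arduino core defines ESP_ARDUINO_VERSION_MAJOR, _MINOR and _PATCH macros (the HelloWorld example later in this chapter prints them). The sketch below packs these into one comparable number. It refuses to compile outside the 2.0.6 to 2.0.14 range recommended in this chapter. The fallback values and the helper names packVersion and coreVersionSupported are assumptions for illustration, included so that the sketch also builds on a host machine.

```cpp
#include <cstdint>

// On the device, include Arduino.h before this so the core's own version
// macros are used. The fallback values (2.0.6) are an assumption that only
// applies when building on a host without the core.
#ifndef ESP_ARDUINO_VERSION_MAJOR
#define ESP_ARDUINO_VERSION_MAJOR 2
#define ESP_ARDUINO_VERSION_MINOR 0
#define ESP_ARDUINO_VERSION_PATCH 6
#endif

// Pack major.minor.patch into one integer that compares in version order
// (assumes minor and patch are each below 256).
constexpr uint32_t packVersion(uint32_t major, uint32_t minor, uint32_t patch) {
  return (major << 16) | (minor << 8) | patch;
}

// True for the core versions this chapter recommends (2.0.6 up to 2.0.14).
constexpr bool coreVersionSupported(uint32_t version) {
  return version >= packVersion(2, 0, 6) && version <= packVersion(2, 0, 14);
}

constexpr uint32_t kCoreVersion = packVersion(
    ESP_ARDUINO_VERSION_MAJOR, ESP_ARDUINO_VERSION_MINOR, ESP_ARDUINO_VERSION_PATCH);

// Fail at compile time, with a readable message, rather than at run time.
static_assert(coreVersionSupported(kCoreVersion),
              "expected arduino-esp32 core 2.0.6 to 2.0.14");
```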

The types of things that tend to go wrong are:

idf.py script from Espressif, custom scripts provided by library developers, the Arduino IDE or CLI, PlatformIO, VSCode, Eclipse, …) expects one shape of file tree, but in the current version things have changed

So: if things go wrong, don’t worry. You’re not alone. Section 2.6.4 tries to map out a path of relatively easy and reliable options. But first, let’s round off this discussion with a look at the available build system CLIs and IDEs.

The game, lest we forget amidst all this gratuitous verbiage, is to (cross-)compile our code against 1001 IoT and embedded systems libraries and burn the resultant firmware images to an ESP32. Along the way it might be nice to throw in a bit of:

We have two main families of options for doing (some subset of) these things:

Examples of IDEs include:

gUw and ZZ and :r! column -t. All the best people use it.

Examples of CLI build systems include:

I was talking to someone recently who works at one of the big semiconductor companies (the one that specifies the architecture used by the chip in your phone) about the difference between microcontrollers and microprocessors, and remembering all the weird challenges that the former tend to throw at you. “Welcome to embedded,” she smiled: the world where getting your toolchain installed can be a week’s work :)

We’ve looked a little at the complexity of the development ecosystem for typical IoT (net-connected microcontroller) devices, and mentioned the plethora of support tools that are available just for the device which this course is based on. Before we move on to practical work, a digression on solving the cross-platform problem for these tools.

Many embedded system projects use some variant of Linux as their host environment. Linux is the only widely-used operating system in which predictable and repeatable builds are straightforward, and the only environment where we can be confident that we’re using a maximally open (and auditable) codebase. The support tools we provide for this course (e.g. magic.sh) require the core GNU/Linux toolset to work.

What if we’re forced to use a different platform, e.g. Windows or MacOS? There are many answers, going back to venerable emulation projects like Cygwin or more modern techniques like virtualisation, but the up-to-the-minute answer is to use containerisation. This section revises a little recent history in that field (and touches on the way that continuous integration and continuous delivery have become widespread, partly as a result). Section 2.6.4.4 introduces the docker images most relevant for our purposes.

When Amazon realised (in the early naughties) that no one went shopping in the middle of the night, they founded Amazon Web Services, whose first product was EC2 (the ‘elastic compute cloud’). Those of us working on ‘big data’ analytics and trying to juggle inadequate hardware budgets with mushrooming data sizes38 suddenly realised that instead of buying servers, we could buy time. For us this meant that we could use only what we needed; for corporate users it meant that costs could move from CapEx (capital expenditure budgets) to OpEx (operational expenditure), making a lot of accountants very happy. The whole space mushroomed, and cloud computing became a thing.

How did cloud platforms partition machines for different uses? The technology was based on virtualisation, the movement of compute loads onto Virtual Machines (VMs), which was a big trend for the next decade or so. The ability to spread loads meant much more efficient use of servers.

This was a big step forward, but we still had multiple copies of the operating system (OS) running for each VM. The next step was to solve this using what came to be called containers, starting with LXC (Linux Containers) and becoming most popular as Docker. Containers share a base image of the OS and layer thin (union) filesystems on top. A specification (e.g. Dockerfile) allows recreation of diverse configurations from base images, and it has become very cheap and easy to spin up new images.

One of the unexpected benefits of these trends has been that we now have a straightforward way to freeze a computational environment (operating system plus arbitrary applications) and move it between machines. Hence the current discussion: we can use containers to encapsulate complicated development environments like our IoT build systems and expect them to operate in a constant manner across platforms.

We can also standardise the way we integrate, test and deploy complex projects, which we’ll discuss briefly in the next section.

Why do complex systems stop working? Bitrot! Well, more precisely, we create complex combinations of libraries, compilers, databases, operating systems, deployment tools, web server runtimes, load balancers… all with their own versions, dependencies and release cycles. If we lose track of all those versions, the system can become impossible to recreate, and if dependency evolution leads to incompatibilities, the maintenance task can grow very large.

A second major source of software entropy is that as time goes on all types of systems evolve (“a building isn’t something you finish but something you start” (Brand 1994)), and while adding and changing features we often introduce bugs and regressions.

The modern answer to how we cope with these various types of chaos in our software development lives is often known as CI/CD:

Along with the ubiquity of hosted version control (think GitLab or GitHub or the like), CI/CD has become an industry standard, at least in the open source world. Containerisation plays a vital role in this picture, allowing us to:

Dockerfile, docker-compose.yml, .gitlab-ci.yml …)

These facilities are now built into the version control hosting sites and many others. For example, the on-line and PDF versions of these notes are generated using a LaTeX Docker image and a CI/CD script with a Makefile to pull in the Pandoc text processor. Every time I push a change to the GitLab repository, the book gets regenerated and re-published. Sweet.

To summarise, containers allow us to specify, compose and distribute entire computational environments in a simple and efficient manner. Repository providers like Docker hub facilitate this process and have become powerful tools for developers. The downside is that we are adding yet another layer of complexity to an already complex picture39, and it would be a mistake to expect to fully understand the container ecosystem without putting in significant effort, but when we get caught in the maze of IoT build systems it is well worth trying to see if an appropriate (and trusted!) image exists and giving it a whirl.

Note: if you’re not a Linux command line fan, try a different approach! One good option in 2025 is the Docker + web serial approach.

We provide a helper script in the course repo to give you an idea of how the various build systems, IDEs and related tooling fit together. If you’re developing on Ubuntu 22.04 you can use the script directly (using the setup command to get started; see magic.sh -h for more commands). Otherwise, you can check the script’s code for inspiration, or follow the instructions for another platform of your choice. (If you’ve never used the *nix command line or written shell script, magic.sh may look like, well, magic. Don’t panic! So long as you know where your towel is, you’ll be fine. Just follow the instructions for your platform that are hosted by the IDE you wish to use.)

Rationale: we would like our ideal build system to do these things:

arduino-esp32) and with ESP-IDF
arduino-esp32 layer via esp32-arduino-lib-builder, perhaps using docker)

To achieve these things, we have (at least) these options:

makeEspArduino Makefile (for GNU Make)
esp32-arduino-lib-builder
lbernstone-docker

None of them do all the things we would like to do, at least not reliably and while keeping pace with the evolution of the ESP ecosystem. (For example, when I wrote this in November 2021, the excellent docker images from Larry Bernstone that encapsulate the complex versioning and rebuild process of the Arduino core were available only for an alpha of the 2.0.0 release, whereas the core itself had reached a release candidate of 2.0.1… Fortunately in 2025 things have improved significantly.)

What to do?

The magic.sh script supports several builds using PlatformIO or makeEspArduino, which are largely compatible with the (1.8.x) Arduino IDE. Other examples do more complex tasks, e.g. running an IDE build (with optional lib-builder reconfiguration) in a docker container, then exporting the firmware .bin to the host machine to allow cross-platform burning to devices. It all gets pretty hairy, and tends to be brittle as a result. So I recommend that you figure out your ideal build process in easy stages. Start with something very simple (e.g. the Arduino IDE version 1.8.19, or the makeEspArduino CLI), get the hang of building and burning firmware to the ESP, and then add bells and whistles later on as required. In 2025 the docker + web serial approach is also a good choice to start with.

Ok, that’s the end of Chapter 2’s general material. The rest of the chapter gives specific tasks for Week 2.

Our objectives this week are to:

Notes:

Serial.begin and pinMode (in setup), and Serial.println and digitalWrite (in loop); the ESP32 library function getEfuseMac will give you access to the MAC address
File>Examples; try ESP32>ChipID for an example of using Serial, and Tools>Serial Monitor to see the results)

Exercises:

getEfuseMac – how would you fix that?
INPUT_PULLUP macro for the switch code
com3505-student-2025):

build and .pio and .vscode as appropriate (see above)
git add [any files you created]
git commit -vam "a helpful commit message"
git push

As noted in chapter 1’s “how the course works”, there are example solutions in the course materials tree. Have a go at doing your own version before looking at these!

First, to avoid checking in lots of ephemeral build files, add a file called .gitignore to the top level of your repository, containing:

.pio
.vscode
build

To do this from the command line try something like this:

cd com3505-student-2025
cat <<EOF >.gitignore
.pio
.vscode
build
EOF
git add .gitignore
git commit -vm 'added a .gitignore file'
git push

From now on, files or directories like .pio (PlatformIO’s build tree) will not be added to the repo or listed in git status output.
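This week’s notes suggest toggling the LED with digitalWrite in loop. A common refinement is to avoid delay() and instead compare the current time against the time of the last toggle, so that loop stays free to do other work. This is an illustration of that idiom, not the course’s example solution. The logic is written as a small hypothetical Blinker class that takes the time as a parameter, so it can be checked on a host machine.

```cpp
#include <cstdint>

// Host-testable core of a "blink without delay" loop: the caller supplies the
// current time in milliseconds and writes the returned state to the LED.
class Blinker {
 public:
  explicit Blinker(uint32_t halfPeriodMs) : halfPeriodMs_(halfPeriodMs) {}

  // Returns the LED state (true = on) for the current time in milliseconds.
  bool update(uint32_t nowMs) {
    // Unsigned subtraction stays correct when the millisecond counter wraps.
    if (nowMs - lastToggleMs_ >= halfPeriodMs_) {
      ledOn_ = !ledOn_;
      lastToggleMs_ = nowMs;
    }
    return ledOn_;
  }

 private:
  uint32_t halfPeriodMs_;
  uint32_t lastToggleMs_ = 0;
  bool ledOn_ = false;
};
```

In a sketch, assuming your board definition provides LED_BUILTIN, you might call pinMode(LED_BUILTIN, OUTPUT) in setup and digitalWrite(LED_BUILTIN, blinker.update(millis()) ? HIGH : LOW) in loop.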

As discussed above there are lots of options, and lots of potential pitfalls, so give yourself plenty of time to do this task, and don’t be surprised if you need to come back to it multiple times to refine your toolset.

I recommend that you start with the Docker/PlatformIO/WebSerial approach, or possibly the Arduino IDE (version 1.8.19, which is old and clunky but reliable). (When using the former you can choose your favourite editor to go with the compile and flash tools, e.g. VSCode or vim or …)

Once you have one of these working, if you wish you might then try PlatformIO in VSCode. The ambitious amongst you might try Eclipse (if you’ve used it before and liked it), or Arduino Create. The important thing this week is just to get one environment running (and if you’re happy with it feel free to stop there!).

Follow these steps:

Blink and GetChipID examples
HelloWorld, from …the-internet-of-things/exercises/HelloWorld

Support for these tools on the University machines is limited to Docker (from 2024 on), the Arduino IDE and VSCode. The latter should allow you to install PlatformIO (see 2.6.4.2).

To run on other platforms, I’ve provided a firmware template and shell script in the course materials. If you’re running on Ubuntu 22.04 or another recent Debian derivative, these may set things up for you without much intervention. You can also use them via Docker, or in a VM. See section 2.6.4.3 below.

The rest of this section details how to work with various different IDE and CLI methods. You don’t need all of them, one or two is fine :)

One of the simplest and most reliable ways of programming the ESP32 is using the Arduino IDE. This IDE is a little like me: quite robust and reliable, but a little antiquated. It is a good place to get started, but possibly not where you want to remain for the whole course. Your choice though: if you like it, go with it.

To pick up the compiler toolchain and other specifics we also need to install an IDE plugin known as a core – see above. The easiest way to install the IDE and the ESP32 core is to follow the “boards manager” instructions in the core documentation. Choose a stable release version, and install core version 2.0.6, which should give you IDF release 4.4.3 (footnote 42). Version 2.0.14, which should give you IDF release 4.4.6, should also be fine.

For example:

boot and pressing (and releasing) reset (see also the Adafruit docs for your board)
File>Preferences and add the Additional Boards Manager URLs as described in the ESP32 Arduino core docs: paste in the stable release link
Tools>Board>Boards Manager and type esp in the search box, which should now bring up esp32 by Espressif Systems; install version 2.0.6 or 2.0.14 (a several hundred MB download; it took about 5 minutes on a Diamond Electronics lab machine)
Tools and

>Board select Adafruit ESP32S3 Feather 2MB PSRAM
>Port select the USB connection (probably /dev/ttyACM0 or /dev/ttyACM1 on Ubuntu, or /dev/cu.SLAB_USBtoUART on a Mac, or e.g. COM15 on Windows)
File>Examples>01.Basic>Blink and upload (“burn”) to the ESP by clicking on the arrow towards the top left of the IDE; if this works, there should (eventually) be a Done uploading message on the middle bar (beneath the code pane)
reset once more and your ESP should start blinking its red LED

Congratulations, you have successfully burnt your first ESP32 firmware!

Another useful example to try at this point is GetChipID:

File>Examples>ESP32>ChipID>GetChipID
Serial Monitor window (from Tools)

If you got that lot to work, you have:

Probably time for a brew!

Didn’t work? The most common problem at this point (and it is, sadly, a new one that recent boards have brought to the table) is getting the right sequence of boot+reset/burn/reset, followed by flash, followed by another reset, followed by opening a serial monitor on the correct port. The sequence needs to go like this:

/dev/ttyACM0 on Ubuntu or COM9 on Windows). Select this port in your IDE (from the Tools menu for Arduino).
boot button, press and release reset, then release boot.

boot/reset sequence; you may need to do this several times!
File>Preferences enable verbose messages for upload. This may give a clue to e.g. port problems. Or try unplugging your board, shutting down the IDE, restarting your machine then trying again!
C:).
magic.sh setup on Ubuntu, for a quicker download try checking out commit f69bbfe in the-internet-of-things/support/tooling/Arduino/hardware/espressif/esp32 (Arduino) or the-internet-of-things/support/tooling/platformio/packages/framework-arduinoespressif32 (PlatformIO)
HelloWorld platformio.ini: platformio/tool-esptoolpy@1.40300.0 (in the platform_packages section).
pio run -t upload -t monitor, after a successful flash you may need to wait until the --- Terminal on ... messages appear before hitting reset in order to ensure that the serial monitor works.

Older boards with extra UART control chips manage this process better, but to support Python (see chapter 14) and the UF2 bootloader, and to exploit the ESP32S3’s built-in USB capabilities, the new boards have gone back to the boot/reset style. See also the relevant discussion of Arduino IDE firmware uploading, native USB glitches and entering bootload mode in Adafruit’s board docs.

In 2025 VSCode with PlatformIO is probably the most popular fully-featured development environment for programming IoT devices.

To install, follow the PlatformIO plugin instructions.

The sequence goes like this:

HelloWorld from the course repo, so you may wish to clone (or download and unzip) that now
EXPLORER tab at the top left; click on platformio.ini, and the IDE will install the toolchain (which takes a few minutes)
PlatformIO: Upload)
Show All Tasks...
PlatformIO Upload and Monitor (adafruit_feather_esp32s3)
pio run -t upload -t monitor

See above for port-related issues. Others:

platformio.ini and you should be good to go.

NOTES:

magic.sh script contains a lot of stuff that you almost certainly won’t need (e.g. devops docker manipulation, Arduino IDE stdout parsing, VSCode in docker setup, etc. etc.); only go there if you need to!

In our the-internet-of-things course materials git repository you can find directory trees called support and exercises/HelloWorld. These contain example firmware code (functionally similar to the Blink example supplied with the Arduino IDE) and a script called magic.sh that (if it works) could simplify your install and CLI build work. To use magic.sh:

# the best place to keep this stuff is in $HOME, so:
cd
# get a working copy of the main setup and build script:
wget https://gitlab.com/hamishcunningham/the-internet-of-things/-/raw/master/support/magic.sh
chmod 755 ./magic.sh
# clone the course repo and the unphone repo if you want copies:
./magic.sh clone
# we don't need the first copy of magic.sh any more as the cloned repo has one:
rm ./magic.sh
cd the-internet-of-things/support
# download the various development tools we'll be using:
./magic.sh setup
# if you want to install the Arduino IDE:
./magic.sh arduino-setup

You should now have a directory called something like /home/yourname/the-internet-of-things/support/tooling containing the Arduino ESP32 core, the Arduino IDE (with sketchbook and preferences directories), PlatformIO CLI, makeEspArduino and (one or more versions of) ESP-IDF, and also directories called unphone and unPhoneLibrary containing library code.

To do a command-line build of a conventional .ino sketch, first put your Feather into bootloader mode by holding boot and pressing reset, then try:

cd the-internet-of-things/exercises/HelloWorld
../../support/magic.sh pio run -t upload -t monitor

When the upload has finished you may need to press reset again to load the new firmware. If all is well you should see something like:

ahem, hello world
IDF version: 4.4.3
ESP_ARDUINO_VERSION_MAJOR=2; MINOR=0; PATCH=6
...
ARDUINO_BOARD=Adafruit Feather ESP32-S3 2MB PSRAM

To take a copy of one of the examples, use the copy-me-to command from inside the example. E.g. if you have your GitLab repo checked out in your home directory, this would create a new tree there:

../../support/magic.sh copy-me-to ~/com3505-student-2025/MyNewExample

For more details take a peek at the script or try

.../magic.sh -h
.../magic.sh -H

You can also do this via Docker: see next section.

This is a reliable and repeatable method that works cross-platform, and gets around Windows and MacOS problems with serial port access. This section will use Windows as an example; see next for more details on how to do this on Linux or MacOS.

This method is a three-step process:

.\build.ps1
firmware_merged.bin from the previous step then reset

Initial configuration can be a bit of a pain, but afterwards the process should be very reliable.

platformio.ini file)
merge_bin.py script in the top level of that project

Note: this method doesn’t play well with network drives on Windows; you can’t do this on the U: drive in Sheffield, for example. So put your files in a local directory, e.g. cd $env:localappdata (which will take you somewhere like C:\Users\YourName\AppData\Local) first and work from there, e.g. git clone https://gitlab.com/hamishcunningham/the-internet-of-things and then cd the-internet-of-things/exercises/HelloWorld.

PS C:\> docker -v; I’m running Docker version 24.0.7
docker run hello-world; if you get an error like “command not found,” or docker: error during connect ... docker daemon is not running, install Docker Desktop if it isn’t already on your machine (I’m running 4.26.1), with WSL support
Docker Desktop Privileged Helper to make changes to your device (you’ll need to enter your password)
docker run hello-world from PowerShell:

PS C:\> docker run hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
c1ec31eb5944: Pull complete
Digest: sha256:4bd78111b6914a99dbc560e6a20eab57ff6655aea4a80c50b0c5491968cbc2e6
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
...

All good, we’re ready to build :)

Now navigate to the directory containing your project. (If you don’t have one, try our HelloWorld example to get started. GitLab will download just that directory for you if you click on “code” and select “zip” at the bottom.)

PS C:\> cd .\the-internet-of-things-master-exercises-HelloWorld\exercises\HelloWorld\
PS C:\the-internet-of-things-master-exercises-HelloWorld\exercises\HelloWorld> ls

Directory: C:\the-internet-of-things-master-exercises-HelloWorld\exercises\HelloWorld

Mode                 LastWriteTime         Length Name
----                 -------------         ------ ----
d-----        22/01/2025     12:11                sketch
-a----        22/01/2025     12:11             59 .gitignore
-a----        22/01/2025     12:11           1355 merge_bin.py
-a----        22/01/2025     12:11            419 build.ps1
-a----        22/01/2025     12:11           1131 platformio.ini
-a----        22/01/2025     12:11           2755 README.mkd
...

Finally, let’s do a compile: .\build.ps1. With luck and a prevailing wind this should create a file called something like .pio/build/adafruit_feather_esp32s3/firmware_merged.bin, which we can now flash to the device using the WebSerial ESP flashing tool from Adafruit.

If you get an error like this:

PS C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld> .\build.ps1
.\build.ps1 : File C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld\build.ps1 cannot be loaded. The
file C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld\build.ps1 is not digitally signed. You cannot
run this script on the current system...

The fix is to run Unblock-File:

PS C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld> Unblock-File .\build.ps1

(If you then want to monitor the serial line, try tools like the Arduino IDE’s Tools>Serial Monitor, a command-line tool like tio, or PlatformIO Core’s -t monitor option.)

When you finally get it working it will create a firmware_merged.bin file:

PS C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld> ls .\.pio\build\adafruit_feather_esp32s3\

Directory: C:\this-and-that\the-internet-of-things-master\exercises\HelloWorld\.pio\build\adafruit_feather_esp32s3

Mode                 LastWriteTime         Length Name
----                 -------------         ------ ----
...
-a----        03/02/2025     17:07        4194304 firmware_merged.bin
...

We can now use this to burn to the ESP32 using a web flashing tool:

espressif.github.io/esptool-js/

Note that this tool initialises with the flashing address set to 0x1000 but you need to write the merged file at 0x0, so change that before flashing.

Alternatively adafruit.github.io/Adafruit_WebSerial_ESPTool/.
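Why 0x0? A merged image is a byte-for-byte picture of flash starting at address 0, with each component already sitting at its own offset, so it has to be written from the start of flash. The sketch below models such a merge step. It is an illustration, not a copy of the project’s merge_bin.py. The layout mentioned in the usage note is an assumption based on common ESP32-S3 Arduino builds: bootloader at 0x0, partition table at 0x8000 and application at 0x10000. The original ESP32 places its bootloader at 0x1000 instead, which may explain the tool’s default address. Gaps are filled with 0xFF, the value of erased flash. Padding to the full flash size would be consistent with the 4194304-byte (4 MiB) file in the listing above, although that is a guess about what the script does. The names FlashPart and mergeImage are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One input binary and the flash address it belongs at.
struct FlashPart {
  std::size_t offset;
  std::vector<uint8_t> bytes;
};

// Illustrative model of merging firmware parts into a single image that is
// written at flash address 0x0. Each part is copied to its own offset; gaps are
// left as 0xFF, the value of erased flash. If flashSize is non-zero the image
// is padded out to exactly that size.
std::vector<uint8_t> mergeImage(const std::vector<FlashPart>& parts,
                                std::size_t flashSize = 0) {
  std::size_t end = flashSize;
  for (const auto& part : parts) {
    end = std::max(end, part.offset + part.bytes.size());
  }
  assert((flashSize == 0 || end == flashSize) && "parts must fit within flashSize");

  std::vector<uint8_t> image(end, 0xFF);
  for (const auto& part : parts) {
    std::copy(part.bytes.begin(), part.bytes.end(),
              image.begin() + static_cast<std::ptrdiff_t>(part.offset));
  }
  return image;
}
```

Suppose bootloader, partitions and app are hypothetical vectors holding the three build outputs. Then something like mergeImage({{0x0, bootloader}, {0x8000, partitions}, {0x10000, app}}, 4 * 1024 * 1024) would produce a 4 MiB image, to be written at 0x0.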

Finally, here are some more details of using the magic.sh script with docker on Linux.

Espressif provide a Docker image for ESP-IDF, and I’ve also prepared an image that bundles the Arduino ESP32 core, PlatformIO CLI, the course git repositories and various bits and pieces. You should be able to install docker on your own machines and get working straight away, but there are two caveats:

docker run --device=/dev/ttyUSB0:/dev/ttyUSB0, but on Windows the equivalent way to access the COM ports is difficult and unreliable. This means that after compiling in the container you will need to run a separate burn command on the host machine.

To run a PlatformIO CLI build, for example, try:

# cd to a directory on your docker host containing platformio.ini:
cd ~/the-internet-of-things/exercises/HelloWorld
# run the iot:magic image:
docker run -ti --device=/dev/ttyACM0 -v $PWD:/home/ubuntu/project \
  hamishcunningham/iot:magic
# cd into the mapped host project directory:
cd project
# compile the sketch:
pio run

If you’re on Linux you can flash and monitor firmware from the container using a flag like --device /dev/ttyUSB0. From MacOS or Windows, quit the container after building and look for firmware.bin under .pio. Then use some local utility (e.g. idf.py) or a web serial tool to flash it.

As usual, the magic.sh script has some convenience commands to get you started:

magic.sh -D: when run from a directory containing sketch/sketch.ino, this command will run the course Docker image

pio run -t upload -t monitor

magic.sh -D pio run -t upload -t monitor

There are lots of gotchas when developing with Docker; e.g. by default any changes you make to a running container (like triggering PlatformIO to install an Xtensa toolchain) will be lost when you quit that container. It is a very powerful tool, and is becoming an industry standard for operations, but be aware that there will be a learning curve :)

Note: if you see an error like

~/the-internet-of-things/exercises/9LEDs $ ../../support/magic.sh -D

docker: Error response from daemon: error gathering device information while adding custom device "/dev/ttyUSB0": no such file or directory.

ERRO[0000] error waiting for container: context canceled

then it means you haven’t got access to the serial port that your ESP32 is connected to. (Perhaps the port isn’t available, or on Linux perhaps you’re not in the dialout group?)

PlatformIO has lots of nice features for IoT development, including managing the toolchain installation process and library installation. If you have the correct platformio.ini configuration file in your project, the system will manage everything for you, with no need to fiddle with your IDE or persuade our Docker image to run.

For example, to build and burn the HelloWorld example try this:

cd into a directory containing platformio.ini, e.g.: cd the-internet-of-things/exercises/HelloWorld

pio run -t upload -t monitor

If you hit port-related trouble, see above.

Note that you can use the CLI in a terminal from within VS Code by navigating to the PlatformIO IDE home within Code and then selecting PlatformIO Core CLI. If you like the command line, this gives you the best of both worlds: a powerful editor with autocomplete etc., plus a quick way to run compiles, scripts and so on without having to resort to the mouse!

We are using a breadboard (also known as proto-board) to assemble our circuits in the labs. Breadboards allow components and wires to be pushed into holes and connected without soldering. (In Hardware 1 you use one to experiment with voltages, resistors, and measuring a circuit’s performance with a multimeter. These are important skills for IoT device prototyping, and will be useful for your project work later in the term.)

To recap, a breadboard has some connections between holes already made – shown in this diagram:

NOTES:

Take your ESP32 feather device and carefully lay it in place on the breadboard over the holes indicated:

Now push gently on the edges of the feather to insert the pins into the holes – you are aiming to keep the device parallel to the breadboard as it goes into the holes, rather than push one side down and leave the other up. Keep moving around pushing in different places if it seems stuck – often new breadboards are a bit stiff at first. If the pins aren’t going in easily then check that they are all lined up above the holes correctly – if not you can bend them gently with a pair of fine pliers. You will need to use some force to push the device down onto the breadboard. To get an idea of how much force might be needed, take a jumper wire and try inserting that into a hole, then multiply that by 28. Try not to bend the header pins – if you do then use the pliers to return them to their correct orientation.

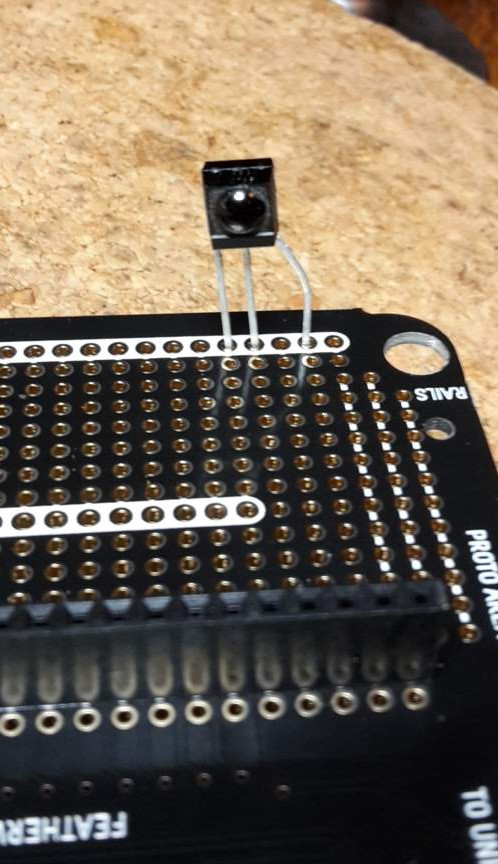

Once the feather device is inserted, add an LED, a 120Ω (or thereabouts) resistor and a push-button switch from your kit as shown:

Pay attention to the orientation of the LED – as it is a (light emitting) diode it will only work one way round. The longer lead of the LED is the anode and it connects to the ESP32 output pin – the shorter lead is the cathode and it connects to the negative or ground connection. Add jumper leads to complete the circuit as shown – colour codes help to make the circuit more readable:

The circuit pictured above can be represented as a schematic – this is a more abstract representation of the components and connections:

The trick here is that the LED is connected to pin 6 and the switch to pin 5, so we might usefully define these in our C++ code as int pushButton = 5, externalLED = 6;.

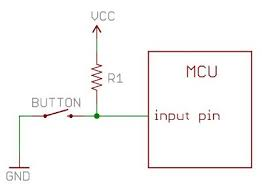

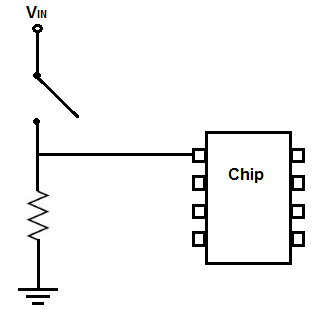
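As a rough check on the "120Ω (or thereabouts)" figure: the series resistor limits the LED current according to Ohm's law, R = (V_supply − V_forward) / I. The sketch below assumes a 3.3 V output pin, a forward voltage of about 2 V (typical for a red LED, but check your LED's datasheet) and a target current of around 10 mA; all three numbers are assumptions, not values taken from the kit.

```cpp
// Series resistor needed to limit LED current (Ohm's law):
//   R = (V_supply - V_forward) / I
// Inputs are assumptions: supply = GPIO voltage, forward = LED forward drop.
double seriesResistorOhms(double supplyVolts, double forwardVolts, double currentAmps) {
  return (supplyVolts - forwardVolts) / currentAmps;
}

// The reverse question: how much current flows with a given resistor?
//   I = (V_supply - V_forward) / R
double ledCurrentAmps(double supplyVolts, double forwardVolts, double resistorOhms) {
  return (supplyVolts - forwardVolts) / resistorOhms;
}
```

Under those assumptions seriesResistorOhms(3.3, 2.0, 0.010) comes out at about 130Ω, and ledCurrentAmps(3.3, 2.0, 120.0) gives roughly 11 mA, which is why a resistor "or thereabouts" is good enough here.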

The code for this sketch should make use of the ESP32’s internal pull-up resistors. Simply pass the INPUT_PULLUP macro to the pinMode function: pinMode(pushButton, INPUT_PULLUP);. These are optional resistors, enabled in software, that connect the input pin to +V, so the input reads high unless it is connected to ground (for example by pressing the button). See this diagram:

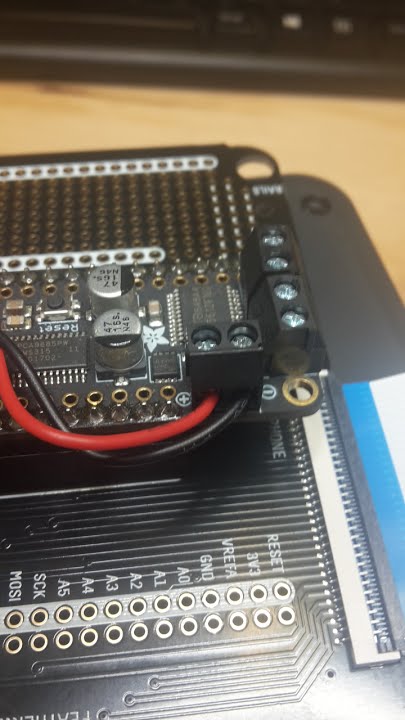

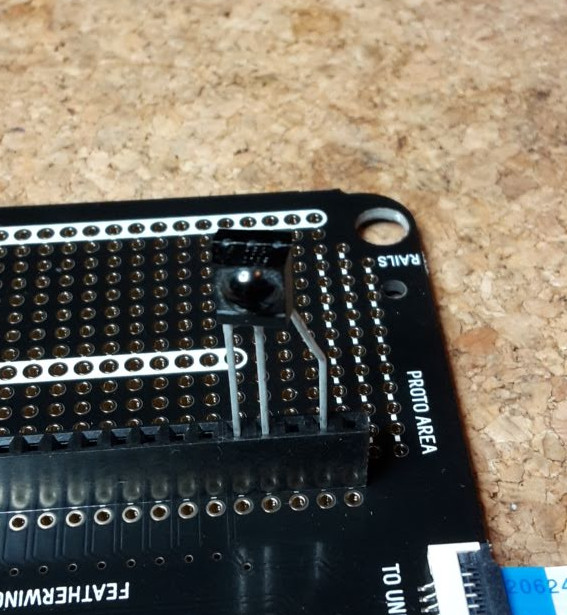
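A minimal sketch of the pull-up logic, assuming the LED should simply light while the button is held (Exercise 2 itself may ask for something different). So that it can run on a PC, the first block of code consists of hypothetical host-side stand-ins for Arduino's pinMode, digitalRead and digitalWrite; on the ESP32 you would delete them, since the real versions come from Arduino.h.

```cpp
// ---- Hypothetical host-side stand-ins for the Arduino API ----
// (On the board these come from Arduino.h; delete this section there.)
const int LOW = 0, HIGH = 1;
const int OUTPUT = 1, INPUT_PULLUP = 2;  // arbitrary placeholder values
int simButtonLevel = HIGH;  // simulated input: the pull-up holds it HIGH until pressed
int simLedLevel = LOW;      // simulated LED output pin
void pinMode(int /*pin*/, int /*mode*/) {}
int digitalRead(int /*pin*/) { return simButtonLevel; }
void digitalWrite(int /*pin*/, int level) { simLedLevel = level; }

// ---- The sketch itself ----
int pushButton = 5, externalLED = 6;

void setup() {
  pinMode(pushButton, INPUT_PULLUP);  // enable the internal pull-up resistor
  pinMode(externalLED, OUTPUT);
}

void loop() {
  // With a pull-up, the pin reads HIGH while the button is open and LOW when
  // the button connects it to ground, so LOW means "pressed".
  bool pressed = (digitalRead(pushButton) == LOW);
  digitalWrite(externalLED, pressed ? HIGH : LOW);
}
```

The inverted test (LOW means pressed) is the main thing to take away: it follows directly from the pull-up holding the pin high until the switch shorts it to ground.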

Below are some pictures of an example board. Note that this one is using the ESP32 with “stacking headers” (additional sockets on top of the board), so we can connect to either the breadboard sockets or the stacking sockets.

You’re now ready to work with the LED and switch in firmware to answer Exercise 2 as above.

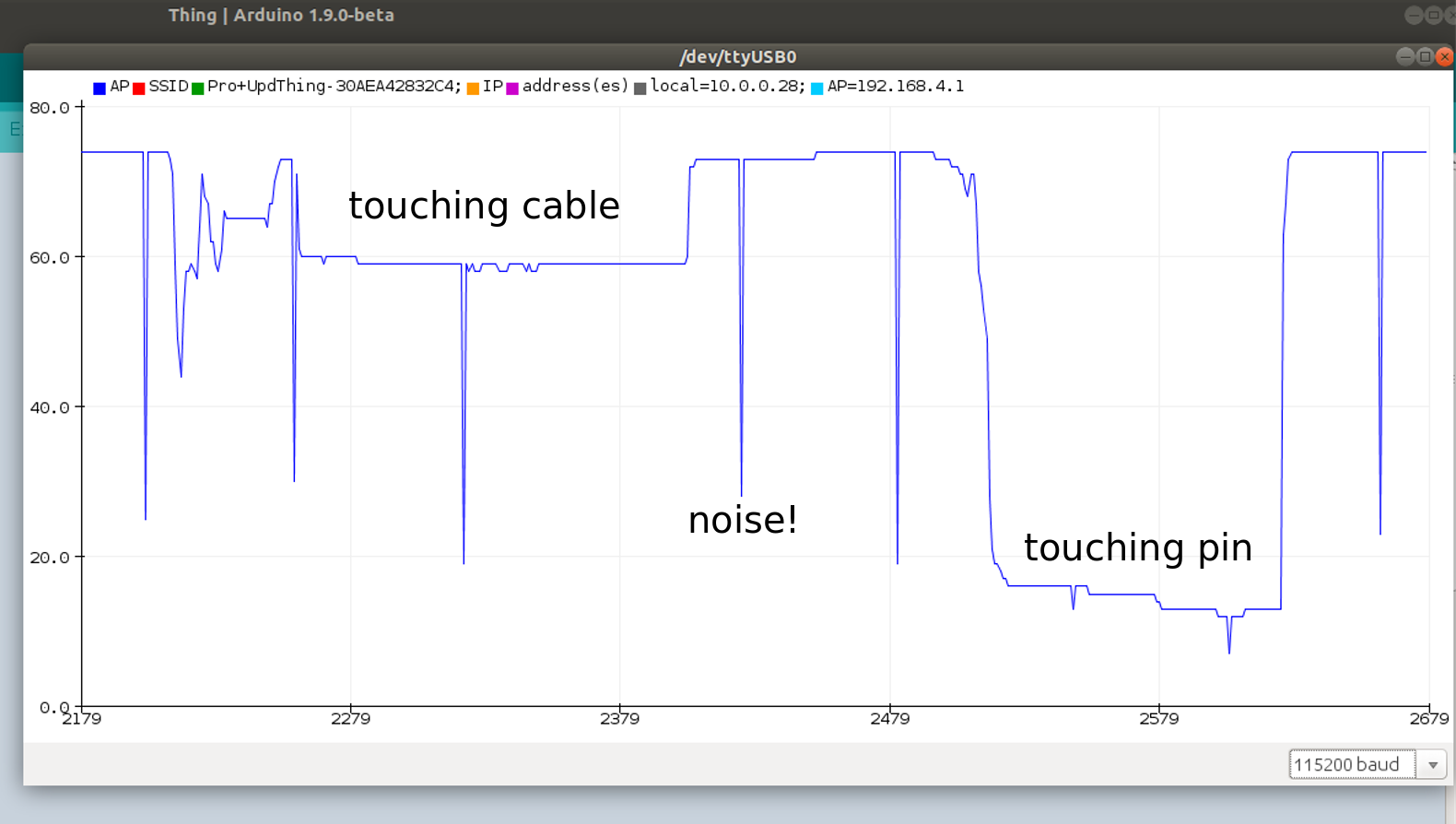

When you’ve finished your version, have a look at exercises/Thing/sketch/Ex02.cpp. (To run exercise 2, set LABNUM to 2 in sketch.ino.) How similar is it to yours? Does it work better, or not as well? If you try running it on your hardware, you’ll likely find that the switch isn’t very responsive and often needs pressing several times before it works. Why might that be?

All will be revealed… :)

Where have we got to? By now you should have:

In this chapter we do two things:

There’s lots to do: crack on!

When we teach computing to beginners, we teach how to build something from the ground up. A new programming language: hello world. A machine learning method: the pseudocode for its algorithmic expression. This is necessary and valuable, but it hides a crucial fact about software development in the 2020s: we almost never build anything significant without starting from the work of tens of thousands of other people. These people form communities, and communities adopt and evolve tooling and workflows to create ecosystems. Choosing which of these ecosystems to hitch a ride on is often one of the most influential decisions in any development project.

I think it is fairly safe to say that few people would have predicted, around the turn of the millennium, that one of the most significant advances in embedded electronics and physical computing would be driven by “the development of contemporary art and design as it relates to the contexts of electronic media” (Barragán 2004). Starting in the early naughties, the Arduino project (Monk 2013; Banzi and Shiloh 2014b; Arduino 2017) had by the mid tennies come to provide the standard toolkit for experimentation in low power circuits. Today the ecosystem that resulted is the first port of call for code to drive the sensors and actuators that give the IoT its interface to the physical world. And as we saw in the previous chapter, the Arduino IDE also provides us with one of the easiest ways to get started programming the ESP32.

We’ll start this chapter by looking in a little more detail at the various contributions that the project has made, and have a bit of a sniff around its development environment and the type of (C++) code it supports.

Arduino can refer to any or all of:

Arduino C++ refers to the preprocessing, macros and libraries that are available via the IDE. (C++ is a medium-level language layered on the low-level C systems programming language.) Over the last decade or so a massive community of open source developers has contributed to an ecosystem of code, documentation, forum posts, GitHub repos and more, which has made Arduino C++ a very productive environment for IoT development.

Remember that the ESP32 is not an Arduino (or even an AVR-based device), but there is a compatibility layer that interfaces it to the Arduino IDE. This makes lots of code for sensors, actuators and so on magically available for the ESP32.

Arduino started out in the early 2000s as a service project for arts and design students in Ivrea, Italy, who were using microcontrollers to create interactive exhibits. It offered hardware based on Atmel’s AVR chips that was cheaper than the alternatives, and added an IDE derived from work on Processing and Wiring (Barragán 2004; Reas and Fry 2007). Some millions (or 10s or 100s of millions) of official and unofficial boards are now in existence, and it remains the most popular platform for embedded electronics experimentation.

The Arduino IDE (a Java Swing desktop app in the classic 1.x series; version 2.x is built on Eclipse Theia and Electron) preprocesses your code (or “sketches,” suffix .ino). Arduino’s programming language is C++ with lots of added libraries and a bit of code rewriting. The IDE runs the GNU C++ toolchain (gcc) as a cross-compiler to convert the Arduino C++ dialect into executable binaries, and includes a compilation handler that converts sketch firmware .ino files into a conventional .cpp file (poke around in /tmp or equivalent to see what the results look like).
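One of those rewrites is easy to see with a small example. Plain C++ requires a function to be declared before it is called, but in a sketch you can call a function defined further down the file, because the Arduino build generates forward declarations (and adds #include <Arduino.h>) near the top of the .cpp it produces. The fragment below is a hand-written approximation of that output, not the tool's literal text, and the function names are hypothetical.

```cpp
// Approximation of a generated .cpp file. In the .ino the author wrote only
// blinkCount() and doubled(); the prototype below stands in for the one the
// Arduino preprocessor adds, so that blinkCount() can call doubled() before
// its definition appears. (#include <Arduino.h> would also be added at the
// top for the board; it is left out here so the fragment compiles on a PC.)
int doubled(int n);  // generated forward declaration

int blinkCount() {
  return doubled(3);  // call appears before the definition below
}

int doubled(int n) {
  return 2 * n;
}
```

Without the forward declaration, a standard C++ compiler would reject the call in blinkCount(); in a .ino sketch you never have to write it yourself.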

Binaries are then uploaded (“burned,” or “flashed”) to the board using various other tools (in our case this is usually esptool, a Python tool from Espressif). The IDE also allows monitoring of serial comms from the board, and provides access to libraries and example code. If you ask it nicely it will plot graphs for you, e.g. of the output of a microphone:
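The Serial Plotter treats each newline-terminated line of numbers as one time step, drawing values separated by commas, spaces or tabs as separate traces. Below is a minimal sketch of that formatting, written against std::ostream so it can be tested on a PC; writePlotterLine is a hypothetical helper name. On the board the same loop would use Serial.print() and Serial.println(), and the samples might come from analogRead() on whichever pin a microphone is wired to (an assumption, since the wiring isn't covered here).

```cpp
#include <cstddef>
#include <ostream>
#include <sstream>  // for std::ostringstream in the usage example
#include <vector>

// Write one time step for the Serial Plotter: comma-separated values,
// terminated by a newline. Each column is drawn as its own trace.
void writePlotterLine(std::ostream& out, const std::vector<int>& values) {
  for (std::size_t i = 0; i < values.size(); ++i) {
    if (i > 0) out << ',';  // separator between traces
    out << values[i];
  }
  out << '\n';  // end of this time step
}
```

For example, writing {512, 300} to a std::ostringstream produces the text 512,300 followed by a newline, i.e. one point on each of two traces.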

As we noted in chapter 2, the IDE is pretty basic, and you may well want to move on to more sophisticated tools later on. It is well worth getting to know it to start with, however, as it is typically the fastest way to get started with new hardware, and the fastest way to find a working example from which to develop new functionality.

In sec. 2.1 we saw two very different ways to define the IoT: as a nascent world robot, or as the simple consequence of increases in computational power and network connectivity in microcontroller hardware. This section gives a bit more context to the origins of the field on the one hand, and the hardware space of IoT devices on the other.

When we look back at the antecedents of the IoT, it becomes clear that the field represents a crossover point between many earlier (and ongoing) areas of computer science and software engineering. Related and predecessor fields include:

If you understand roughly what each of these terms means then you have a good basis for understanding what the IoT means (and for distinguishing the marketing speak from the actuality of possible technology options).

The term Internet of Things was coined by Kevin Ashton in 1999 for a presentation at Procter and Gamble. Evolving from his promotion of RFID tags to manage corporate supply chains, Ashton’s vision of the Internet of Things is refreshingly simple: “If we had computers that knew everything there was to know about things — using data they gathered without any help from us — we would be able to track and count everything, and greatly reduce waste, loss and cost. We would know when things needed replacing, repairing or recalling, and whether they were fresh or past their best.” (Ashton 2011)

Of course, devices had been ‘on the internet’ for several years before this, from at least 1982 in the case of a drink vending machine at Carnegie Mellon University in Pittsburgh (Machine, n.d.). Using a serial port converter, the status lights of the front panel of the machine were connected to the Computer Science departmental computer, a DEC PDP-10 (for which today’s equivalent cost would be around $2 million!). The Unix finger utility was modified to allow it to report the level of coke bottles and whether they were properly chilled. Internet users anywhere could type “finger coke@cmua” and read the status of the machine. (It is notable that the world’s first IoT device was enabled by openly available Unix source code.)

A camera pointed at a coffee-pot in Cambridge’s computer science department was video-streamed on the internet from 1991, and when control from the web to the camera was established in 1993 the first webcam was born (Fraser 1995). Presaging very contemporary anxieties, a toaster had been connected to the internet in 1990 at the INTEROP exhibition (Romkey 2017), and nearly caused a strike as preparing food was an activity allocated to unionised labour. However, it wasn’t until 2001 that a toaster became an IoT device in a modern sense, able to dynamically query a webservice for the current weather forecast, and then burn the appropriate pictogram onto a piece of toast (Ward 2001).

So we can see that from the earliest days of IoT (when it was often called pervasive or ubiquitous computing) our current concerns around open source, security (Denning and Denning 1977) and human obsolescence were already recognised.

“It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity…” (Dickens 1877)

We do indeed live in an epoch of belief and incredulity, with feverish hype about the IoT widespread across the electronics and computing industries. Want a £400 wifi-connected juicer that locks you into proprietary ingredient pouches, anyone? Meanwhile ‘policy bloggers’ burble excitedly about techno-utopias such as smart cities that eliminate traffic: “Imagine a city with no traffic at all” (O’Muircheartaigh 2013). Security researchers are desperately trying to warn us of the dangers of letting our personal data leak out from our devices (Sarthak Grover and Feamster 2016). After Fitbit users’ personal exercise data was exposed publicly (Loftus 2011), the company solemnly announced “We have also updated our default settings for new users for activity sharing to ‘private.’”